Today we can record everything we do, as well as everything a robot does. These past experiences can be a very rich source for decision-making, but the raw data collected from sensors and actuators is too large and too low-level to use directly. It needs to be summarized and mapped into meaningful abstractions for effective use by people and machines.

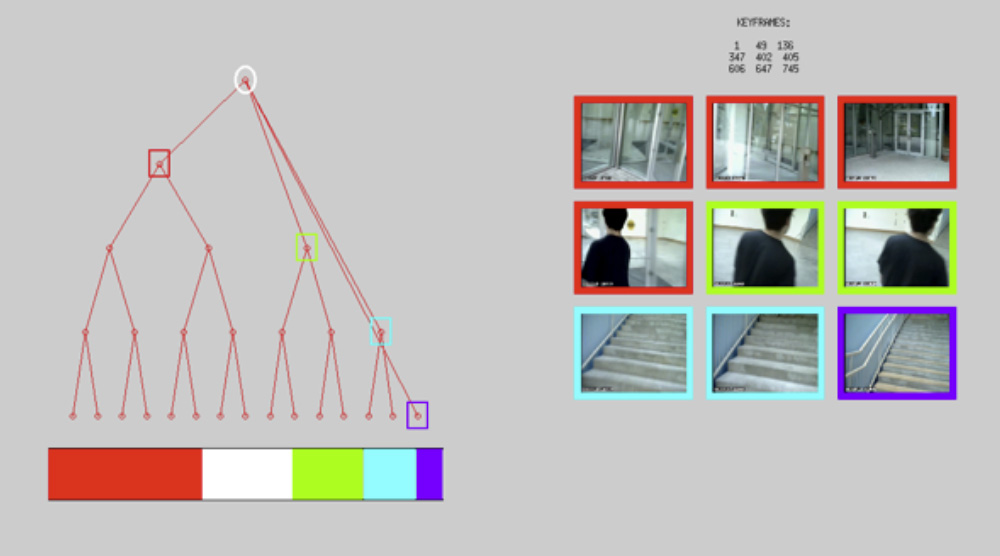

We are developing algorithms and systems to extract the salient points in data and map the signals into words and abstractions. For example, GPS points can be mapped to street addresses, and street addresses can be connected to activities such as “having coffee at Starbucks” using online repositories such as Wikipedia and Yelp. To address the data summarization challenge, we are developing a summarization approach based on coresets. Coresets allow us to run slow algorithms on large data by carefully selecting a small subset of the data that is guaranteed to give approximately the same result as the entire data set, even when running the algorithm on the entire data set is intractable. The coreset points summarize the large clusters in the data as well as the outliers contained in small clusters. We have developed coreset algorithms that can summarize complex signals, including video and GPS signals.

Using these algorithms, we are developing a system called iDiary. In iDiary, a user can provide their GPS or video data streams. These data streams are summarized and mapped into textual descriptions of the trajectories. The iDiary system includes a user interface similar to Google Search that allows users to type text queries about their activities (e.g., “Where did I buy books?”) and receive textual answers based on their GPS signals.
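To make the coreset idea more concrete, here is a minimal sketch of one simple construction: importance sampling for k-means in the style of "lightweight coresets". It is not the algorithm used in iDiary, and it is much simpler than the coresets for video and GPS signals mentioned above. The function names, the sample size `m`, and the synthetic data are assumptions made purely for illustration.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def lightweight_coreset(points, m, seed=0):
    """Return m weighted points sampled by importance (illustrative sketch).

    Each point is drawn with probability that mixes a uniform term with a term
    proportional to its squared distance from the data mean, so far-away points
    (potential outliers) are more likely to be kept. The weight 1 / (m * prob)
    makes weighted sums over the sample unbiased estimates of sums over all points.
    """
    rng = random.Random(seed)
    n = len(points)
    dim = len(points[0])
    mean = tuple(sum(p[d] for p in points) / n for d in range(dim))
    sq_dists = [squared_distance(p, mean) for p in points]
    total = sum(sq_dists)
    if total == 0:
        # All points coincide: fall back to uniform sampling.
        probs = [1.0 / n] * n
    else:
        probs = [0.5 / n + 0.5 * sq / total for sq in sq_dists]
    indices = rng.choices(range(n), weights=probs, k=m)
    return [(points[i], 1.0 / (m * probs[i])) for i in indices]


def kmeans_cost(weighted_points, centers):
    """Weighted k-means cost: each point pays its squared distance to the nearest center."""
    return sum(
        w * min(squared_distance(p, c) for c in centers)
        for p, w in weighted_points
    )


# Synthetic data (an assumption for this sketch): two Gaussian clusters in 2D.
rng = random.Random(1)
data = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(5000)]
data += [(rng.gauss(8, 1), rng.gauss(8, 1)) for _ in range(5000)]
centers = [(0.0, 0.0), (8.0, 8.0)]

full_cost = kmeans_cost([(p, 1.0) for p in data], centers)
coreset = lightweight_coreset(data, m=200)
approx_cost = kmeans_cost(coreset, centers)
print("cost on all 10000 points:", full_cost)
print("cost on 200 coreset points:", approx_cost)
```

The two printed costs can be compared to see how closely the small weighted sample tracks the full data set for a given set of centers. A downstream algorithm would then run on the 200 weighted points instead of the 10,000 originals.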